Search engines

Could someone go three days without searching for any information?

A few years ago, Google researchers examined this simple question in a study that also involved anthropologists, sociologists, psychologists, and other specialists.

As psychologist Dr. Brett Kennedy observed, since their earliest existence humans have constantly sought answers, and through those answers they try to find and improve themselves.

The internet—and searching on it—has been instrumental in this process, and now almost everything—information, products, ideas, etc.—is accessible everywhere via the internet.

As one participant in that study put it, access to information adds real value to life.

Being able to use web search is an excellent way to do much more in less time, to find what is needed immediately, to understand things, and to make better decisions about what matters. In short, it improves life.

Although the most popular search engine, Google Search, does not publish search statistics, it is estimated that about 5.6 billion searches are made every day (2019).

That means roughly 3.9 million searches per minute, or about 65,000 per second.
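The per-minute and per-second figures come from simple division of the daily estimate. A minimal sketch of that arithmetic, using the 5.6 billion figure quoted above:

```python
MINUTES_PER_DAY = 24 * 60       # 1,440
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400

def search_rates(searches_per_day: float) -> tuple[float, float]:
    """Return (searches per minute, searches per second) for a daily total."""
    return searches_per_day / MINUTES_PER_DAY, searches_per_day / SECONDS_PER_DAY

per_minute, per_second = search_rates(5.6e9)
print(f"{per_minute:,.0f} per minute, {per_second:,.0f} per second")
# prints: 3,888,889 per minute, 64,815 per second
```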

Unsurprisingly, searching on web search engines is one of the most popular ways to find a website.

It is also the most popular way to find new customers and reach people interested in what is offered.

Therefore, getting search engines to recognize a page and show it for the right queries is a necessary step for any online presence that wants a chance of success.

The reasons people search are grouped by Google into six core categories—or six basic human needs: to take action, to understand and learn, to belong, to enrich an experience, to self-improve, and to self-express.

According to Google, people use search for many reasons, from practical questions like “where can I buy these shoes?” to deeper, more philosophical ones like “who do I want to be?”.

Search engines are constantly evolving to provide the best answers to the questions posed, since only good results keep users coming back and trusting them, which in turn keeps users on the engines' pages, where they are shown ads: the ultimate goal.

To return the right results for entered queries, search engines try to understand intent behind them, not just the literal meaning of the words.

These intents fall into three primary categories, each best served by different result types:

- Transactional queries. Users seek an imminent transaction, e.g., buying a book or a smartphone.

- Navigational queries. Users seek guidance to a specific site or app, e.g., Skroutz, YouTube.

- Informational queries. Users seek specific information, e.g., “how to make coffee?” or “what are the symptoms of COVID?”
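To make the three categories concrete, here is a deliberately naive, rule-based sketch that labels a query by looking for cue words. The cue lists are hypothetical and purely illustrative; search engines infer intent from far richer signals than keyword matching.

```python
# Hypothetical cue words, for illustration only.
TRANSACTIONAL_CUES = {"buy", "price", "cheap", "order", "deal"}
INFORMATIONAL_CUES = {"how", "what", "why", "when", "symptoms", "guide"}
NAVIGATIONAL_CUES = {"youtube", "skroutz", "facebook", "login"}

def classify_intent(query: str) -> str:
    """Guess a query's intent category with simple keyword rules."""
    words = set(query.lower().replace("?", " ").split())
    if words & TRANSACTIONAL_CUES:
        return "transactional"
    if words & INFORMATIONAL_CUES:
        return "informational"
    if words & NAVIGATIONAL_CUES:
        return "navigational"
    return "unclear"  # no cue found: the intent cannot be inferred from the words alone

for q in ["buy a smartphone", "YouTube", "how to make coffee?", "arachova"]:
    print(q, "->", classify_intent(q))
```

A broad query such as "arachova" matches no cue and comes back "unclear", which illustrates why very general queries are hard to serve well.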

How do search engines work?

Simply put, their operation has three parts.

- Crawling is the process by which automated programs—crawlers or bots—scan the web from page to page, following links on known pages or using submitted URL lists, and record new pages or pages updated since the previous pass.

- Indexing is the process by which specialized programs visit pages whose addresses were acquired from Crawling, analyze the content, functionality, and even appearance, and try to understand what they are.

The information is then stored in an index (e.g., the Google index), a massive database typically distributed across many machines worldwide.

- Serving search results is the third and final stage.

Once a user searches for a term on, say, Google Search, Google looks up its index and ranks the matching results based on factors such as the user’s location, language, device, prior searches, and of course the query itself.

For example, for “bike repair,” different results will be returned to a user in Athens than to one in Thessaloniki.
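The three stages can be illustrated with a toy model: a breadth-first crawler that follows links across a tiny hypothetical "web", an inverted index that maps each word to the pages containing it, and a serving step that ranks pages by how many query words they contain. Real engines use many more ranking signals, such as the location, language, and device mentioned above; this sketch only shows the shape of the pipeline.

```python
from collections import defaultdict, deque

# A toy, entirely hypothetical web: page URL -> (page text, outgoing links).
WEB = {
    "/": ("bike shop athens", ["/repair", "/about"]),
    "/repair": ("bike repair service athens", ["/"]),
    "/about": ("about our shop", []),
}

def crawl(start: str) -> list[str]:
    """Crawling: breadth-first traversal, following links from a known page."""
    seen, queue, order = {start}, deque([start]), []
    while queue:
        url = queue.popleft()
        order.append(url)
        for link in WEB[url][1]:
            if link not in seen:
                seen.add(link)
                queue.append(link)
    return order

def build_index(urls: list[str]) -> dict[str, set[str]]:
    """Indexing: map every word to the set of pages that contain it."""
    index: dict[str, set[str]] = defaultdict(set)
    for url in urls:
        for word in WEB[url][0].split():
            index[word].add(url)
    return index

def serve(index: dict[str, set[str]], query: str) -> list[str]:
    """Serving: rank pages by the number of query words they contain."""
    scores: dict[str, int] = defaultdict(int)
    for word in query.lower().split():
        for url in index.get(word, ()):
            scores[url] += 1
    return sorted(scores, key=lambda u: (-scores[u], u))

index = build_index(crawl("/"))
print(serve(index, "bike repair"))  # ['/repair', '/']
```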

Each search engine may differ in structure and operation, but the fundamentals are roughly the same.

There are many search engines.

The most well-known and popular include Google Search, YouTube, Pinterest, Bing, DuckDuckGo, Baidu, Amazon, Skroutz, etc.

Each typically focuses on a particular type of search.

According to statcounter.com, Google dominated the search engine market in Greece in December 2021 (fig. 5.1), so the actions described here for getting a website to rank well focus on Google Search results.

Figure 5.1: Search Engine Market Share, Greece, 12.2021 (source: https://gs.statcounter.com/search-engine-market-share).

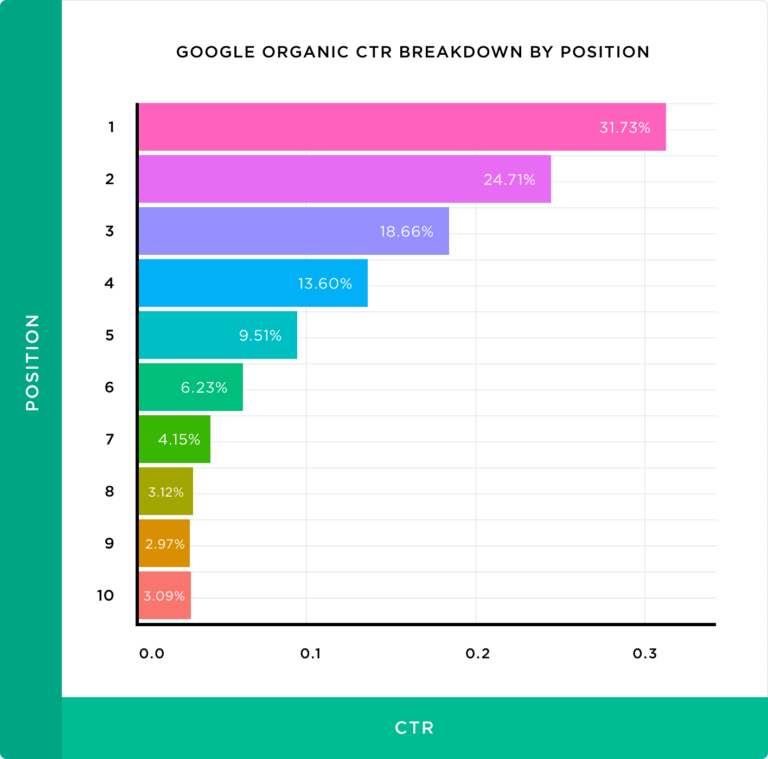

As noted in “Chapter 2: Preparation,” studies analyzing millions of searches and billions of results show that the higher a result ranks, the more often users select it.

Almost one third of clicks on a query will go to the No. 1 position.

The 2nd position gets fewer, and the further down a result is, the lower its click-through rate (CTR) becomes.

Studies also show the 1st result is ten times more likely to be selected than the 10th.

Additionally, positions 7–10 have roughly similar chances of being selected, while moving up one position, especially within positions 1–7, brings about 30% more likelihood of a click (fig. 5.2).

Figure 5.2: CTR and ranking position in Google results - Backlinko.
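A rough consistency check on these figures: if every step up added the same 30%, compounding from position 10 to position 1 (nine steps) gives the ratio below. This constant-gain model is a simplifying assumption, since the studies find positions 7–10 nearly flat; it only shows that a ~30% gain per step and a "ten times" gap are of the same order.

```python
def relative_ctr(steps_up: int, gain_per_step: float = 0.30) -> float:
    """Click likelihood after moving up `steps_up` positions, relative to the start,
    under the simplifying assumption of a constant gain per position."""
    return (1 + gain_per_step) ** steps_up

# Position 10 -> position 1 is nine steps up.
print(round(relative_ctr(9), 1))  # 10.6
```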

Users behave similarly across both small devices (smartphones, tablets) and large devices (desktops, laptops).

The studies also indicate that after the 10th result (where, by default, results move from the first page to the second), click-through rates are very low and roughly the same for all results.

Hence the familiar quip: “the best place to hide a body is page two of Google Search results.”

Therefore, for an online presence, it is essential both to appear in Google Search results and to climb as high as possible—ideally to No. 1—so pages receive traffic from Google queries.

As a result, every online presence—and especially any personal or professional website—should first be made known to search engines (submission), and second be monitored for performance in them, so fixes can be made to gain higher rankings.

What follows are some tools, basic methods, and methodologies for better managing and optimizing a website’s presence on Google Search, with extensions to other search engines where advisable.

The logic that applies to Google Search largely applies to the rest.

Submission and monitoring

Suppose a new website has been planned and organized, with a defined business goal, a sound information structure, useful content (text, images, videos, etc.), and it has been built by a professional who delivered an excellent result.

Congratulations.

Now what?

How can the site become known and attract traffic to get the first online customers?

The primary way visitors access a site is via its URL, so the question is where prospective visitors can find and choose the site’s URLs.

There are many answers.

Post on social media like Facebook, Instagram, Twitter; share the address via email to a prospect list; list the new online presence on websites that already have visitors and invite them to visit; or even hand out cards with the site address.

The most important thing, however, is for the pages to be in the database of the major search engines, chiefly Google.

At this point, Google knows nothing about the new site, so an introduction is needed.

The best way to introduce it is via Google’s powerful tool: Google Search Console (search.google.com/search-console).

Google Search Console provides tools to manage websites relative to the Google Index, crawling, and appearance/performance in Google Search results.

It offers tools for understanding how Google “sees” and “understands” the site, alerts the owner to significant errors, omissions, and failures, and, most importantly, provides the ability to submit a new set of pages or an entire website to the Google index.

To start, click “Start now” on the Console’s home page and sign in with a Google account (e.g., a Gmail account).

From the main dashboard, choose “Add property” to declare the website as a new property (fig. 5.3).

Figure 5.3: Submitting a website to Google via Search Console.

Next, verification is required so Google can be sure the website belongs to the submitter.

This is usually done by uploading a file to the server folder that hosts the website, by adding a small snippet of code (an HTML meta tag) to the website’s home page, or by adding specific DNS records for the site’s domain; these steps are handled either by the hosting provider or by the site’s developer.
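For the code-snippet method, Search Console supplies a meta tag named google-site-verification to place in the home page's head section. A small sketch that checks a page's HTML for that tag before clicking Verify might look like this. Fetching the live page (for example with urllib.request) is left out so the check runs on any HTML string, and the token shown is a placeholder.

```python
from html.parser import HTMLParser

class VerificationTagFinder(HTMLParser):
    """Collects the content of <meta name="google-site-verification"> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.tokens: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "meta":
            attributes = dict(attrs)
            if attributes.get("name") == "google-site-verification":
                self.tokens.append(attributes.get("content") or "")

def find_verification_tokens(html: str) -> list[str]:
    """Return all verification tokens found in the given HTML."""
    finder = VerificationTagFinder()
    finder.feed(html)
    return finder.tokens

home = '<html><head><meta name="google-site-verification" content="PLACEHOLDER_TOKEN" /></head></html>'
print(find_verification_tokens(home))  # ['PLACEHOLDER_TOKEN']
```

An empty list means the tag is missing, so verification through this method would fail.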

Ownership verification is performed once per owner; Google then begins sending its Googlebots to the site’s files to analyze and evaluate them for inclusion in its results going forward.

After submission, Googlebots will begin scanning the pages, starting from the home page and following all links. However, there is a more appropriate way to submit all pages: Sitemaps.

Sitemaps are a standard practice in website creation—files listing all URLs of a site and possibly some extra info (e.g., last modified time for each URL).
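As an illustration of the format, the sketch below builds a minimal sitemap with Python's standard library, following the sitemaps.org protocol: a urlset of url entries, each with a loc and an optional lastmod. The example.gr URLs and dates are placeholders; in practice, many content management systems generate this file automatically.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_sitemap(entries: list[tuple[str, str]]) -> str:
    """Build sitemap XML from (URL, last-modified date) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod  # W3C date format, e.g. 2018-04-30
    declaration = '<?xml version="1.0" encoding="UTF-8"?>\n'
    return declaration + ET.tostring(urlset, encoding="unicode")

print(build_sitemap([
    ("https://www.example.gr/", "2018-04-30"),
    ("https://www.example.gr/contact", "2018-04-28"),
]))
```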

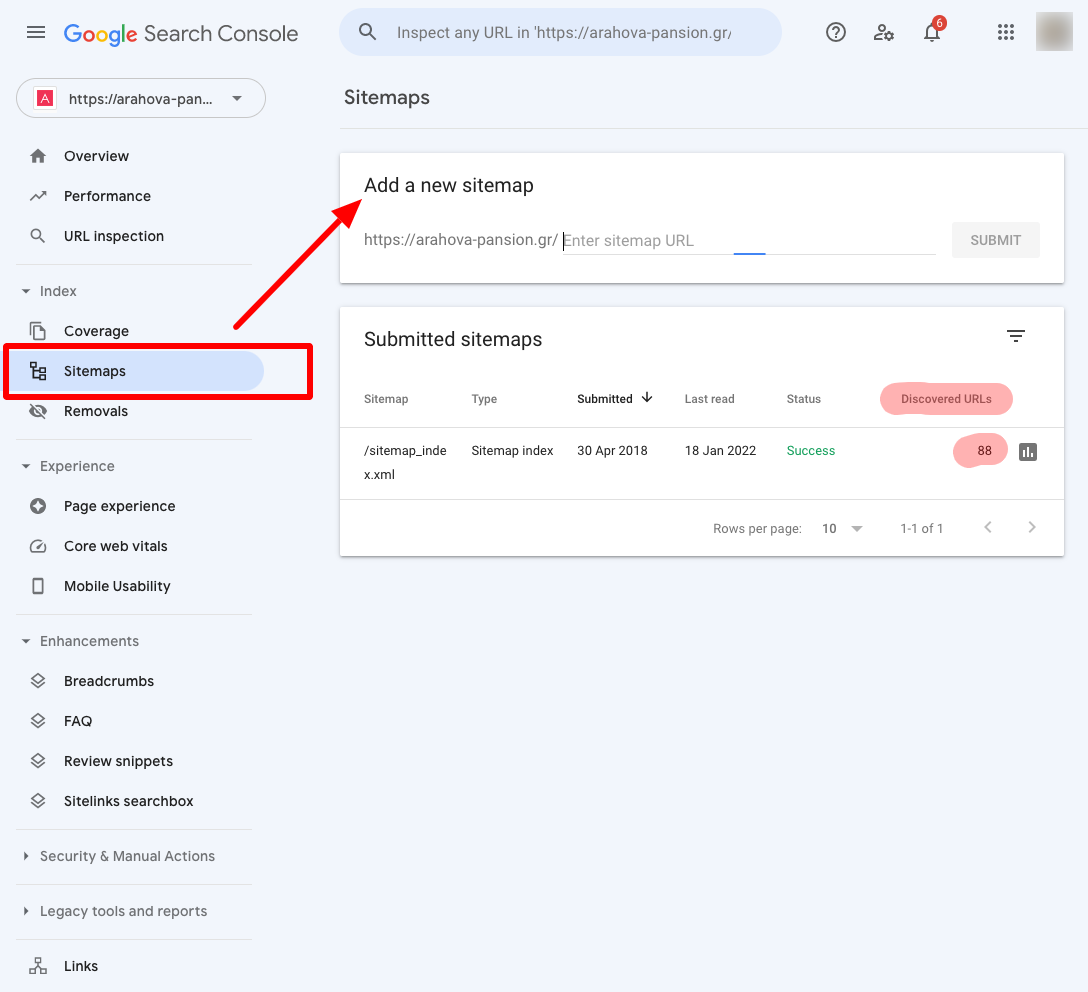

If the website has a Sitemap address (and it should), the Sitemaps can be submitted from the Console’s Sitemaps section (fig. 5.4).

In the same section, submitted Sitemaps can later be viewed and managed, as in the example below, where the file sitemaps_index.xml was successfully submitted on April 30, 2018, and 88 URLs were retrieved.

Figure 5.4: Submitting sitemaps in Search Console.
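The file name sitemaps_index.xml suggests a sitemap index: a sitemap that lists other sitemaps, whose URLs are then counted. A sketch of that counting logic follows. The download step is passed in as a hypothetical fetch function so the example runs offline, and the example.gr files are made up.

```python
import xml.etree.ElementTree as ET
from typing import Callable

NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

def count_urls(xml_text: str, fetch: Callable[[str], str]) -> int:
    """Count page URLs in a sitemap; for a sitemap index, follow its child sitemaps."""
    root = ET.fromstring(xml_text)
    if root.tag.endswith("sitemapindex"):
        return sum(count_urls(fetch(loc.text.strip()), fetch)
                   for loc in root.findall("sm:sitemap/sm:loc", NS))
    return len(root.findall("sm:url/sm:loc", NS))

# Hypothetical in-memory "server" standing in for real downloads.
FILES = {
    "https://www.example.gr/pages.xml":
        '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">'
        '<url><loc>https://www.example.gr/</loc></url>'
        '<url><loc>https://www.example.gr/hotels</loc></url></urlset>',
}
INDEX = ('<sitemapindex xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">'
         '<sitemap><loc>https://www.example.gr/pages.xml</loc></sitemap></sitemapindex>')
print(count_urls(INDEX, lambda url: FILES[url]))  # 2
```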

That’s it!

The first and essential step of submitting the site to the Google Index is done.

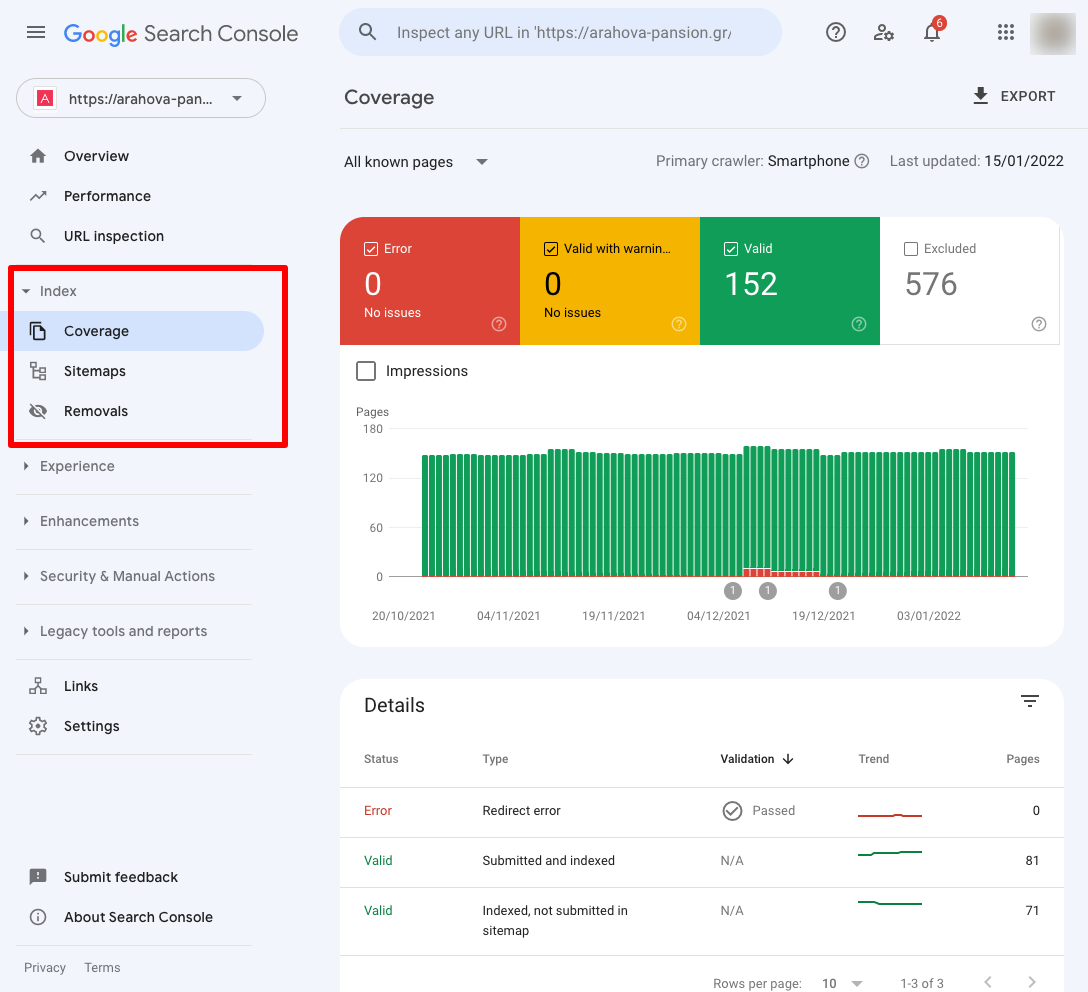

Now, the main area of interest is Index → Coverage, where the number of URLs Google knows for the site can be seen, with statuses such as Valid, Error, Valid with warnings, and Excluded.

At this stage, ensure Valid pages are the correct ones and there are no Error or Warning URLs—details appear beneath the chart (fig. 5.5).

Figure 5.5: Index coverage in Search Console.

Now there’s visibility into which URLs Google knows and their statuses.

After successful submission in Search Console, Google will begin analyzing and understanding the pages’ purpose and value, and soon include them in results.

Through Search Console, a site owner can monitor which pages appear most, for which queries, at what ranking positions, in what formats, on which devices, from which countries visitors come, and much more.

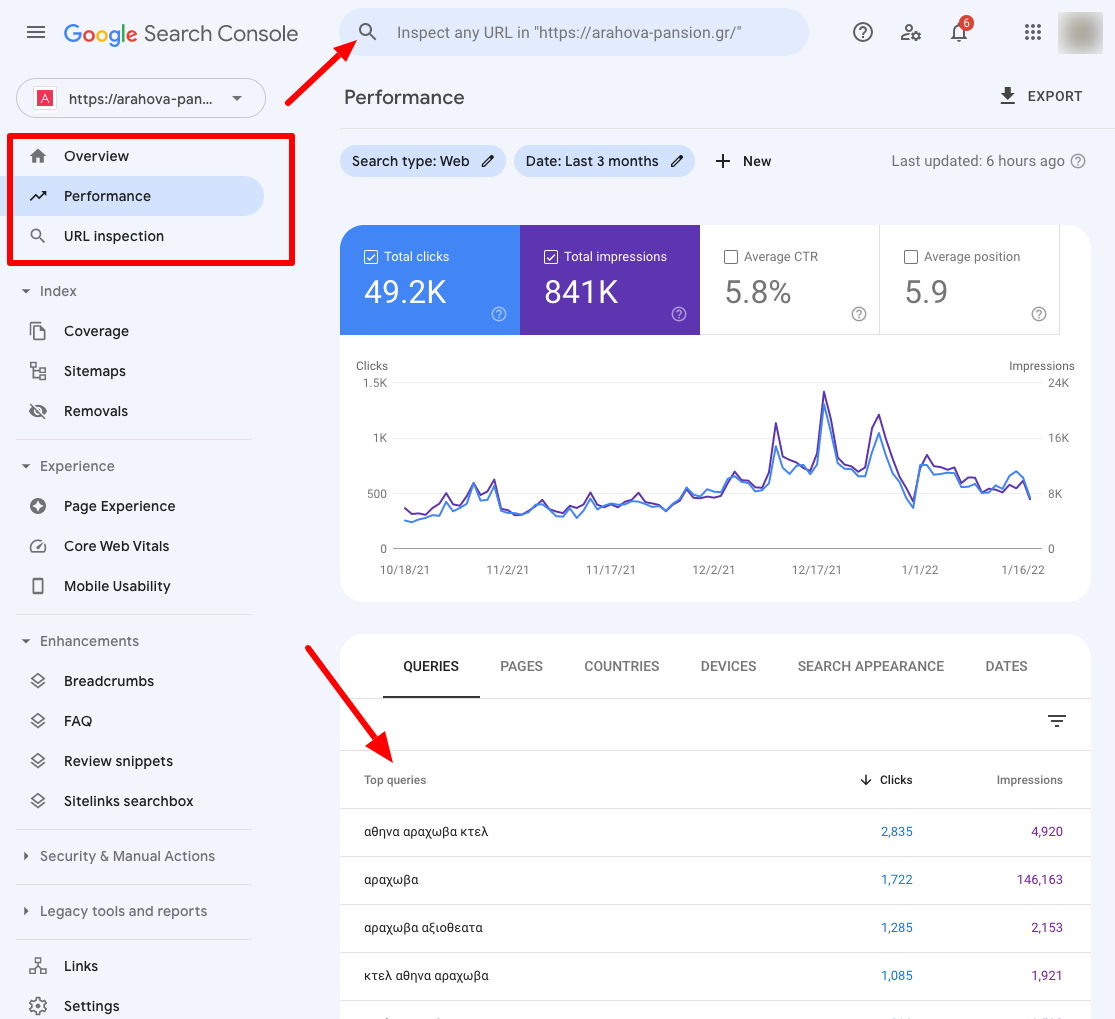

The core area is Performance (fig. 5.6).

There, overall site traffic metrics from Google Search are shown; in the sample below, in the last three months the site received 49,200 clicks from Google Search results and appeared (Impressions) 841,000 times.

The average CTR (click-through rate) is 5.8% (for every 100 impressions, there are 5.8 clicks), and the average position is 5.8.
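CTR is simply clicks divided by impressions. A minimal sketch using the totals from the sample above:

```python
def ctr_percent(clicks: int, impressions: int) -> float:
    """Click-through rate as a percentage: clicks per 100 impressions."""
    if impressions == 0:
        return 0.0  # no impressions, so no meaningful CTR
    return 100 * clicks / impressions

print(f"{ctr_percent(49_200, 841_000):.2f}%")  # 5.85%
```

The result, about 5.85%, agrees with the 5.8% shown in the panel; a small difference is expected because the displayed totals (49,200 and 841,000) are themselves rounded.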

The table below the chart is crucial: it contains data on the queries for which the site appears, the pages receiving clicks, and more.

At the top, there’s also an option to enter a specific page URL to view detailed information for it.

Figure 5.6: Performance panel in Search Console.

This panel is excellent: beyond the reliability of its data (it is practically the only tool with first-hand data, since that data comes directly from Google Search), it offers very useful features.

A frequent use is to see which queries pages appear for and how often, in order to understand user interest and search patterns, and then to check where the result ranks and whether anything should or could be done to improve it.

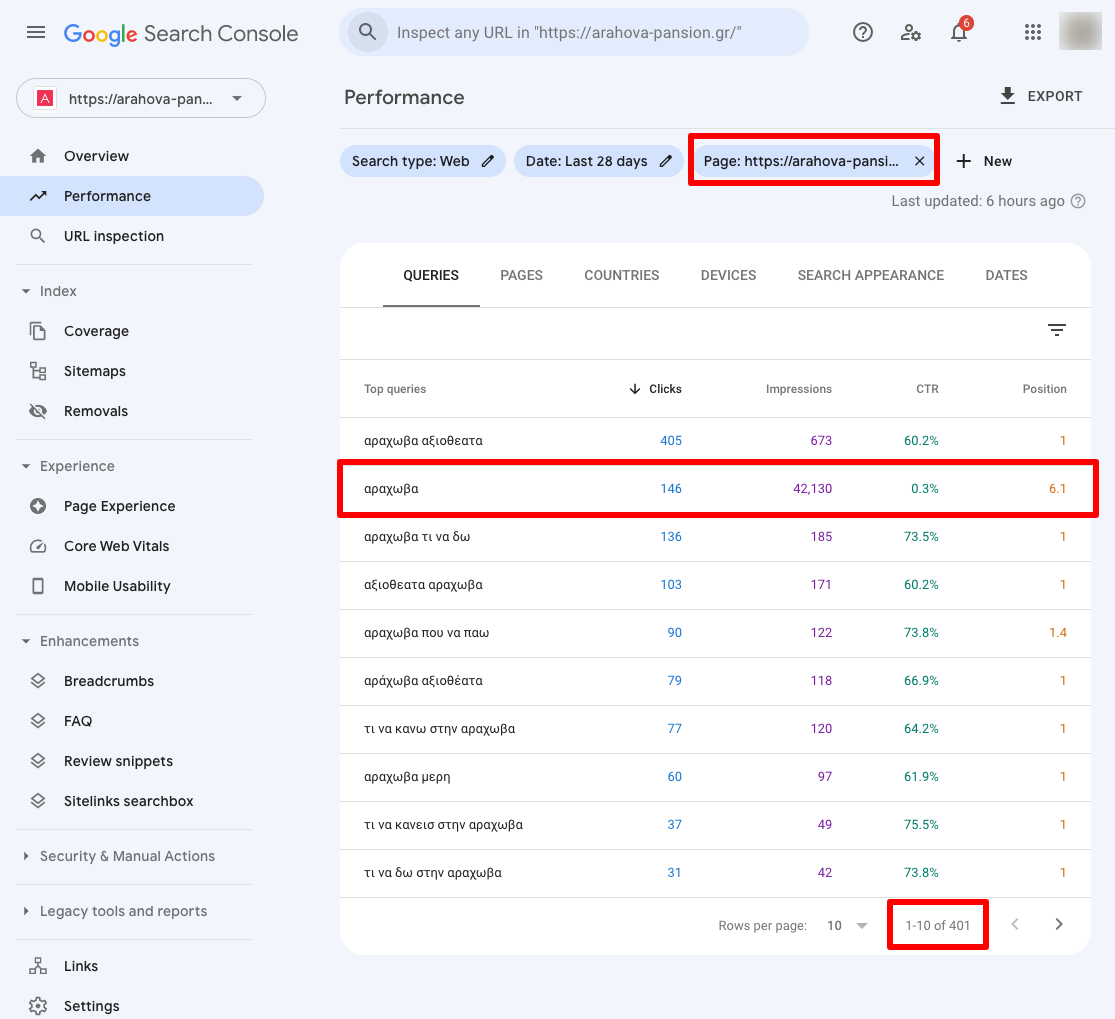

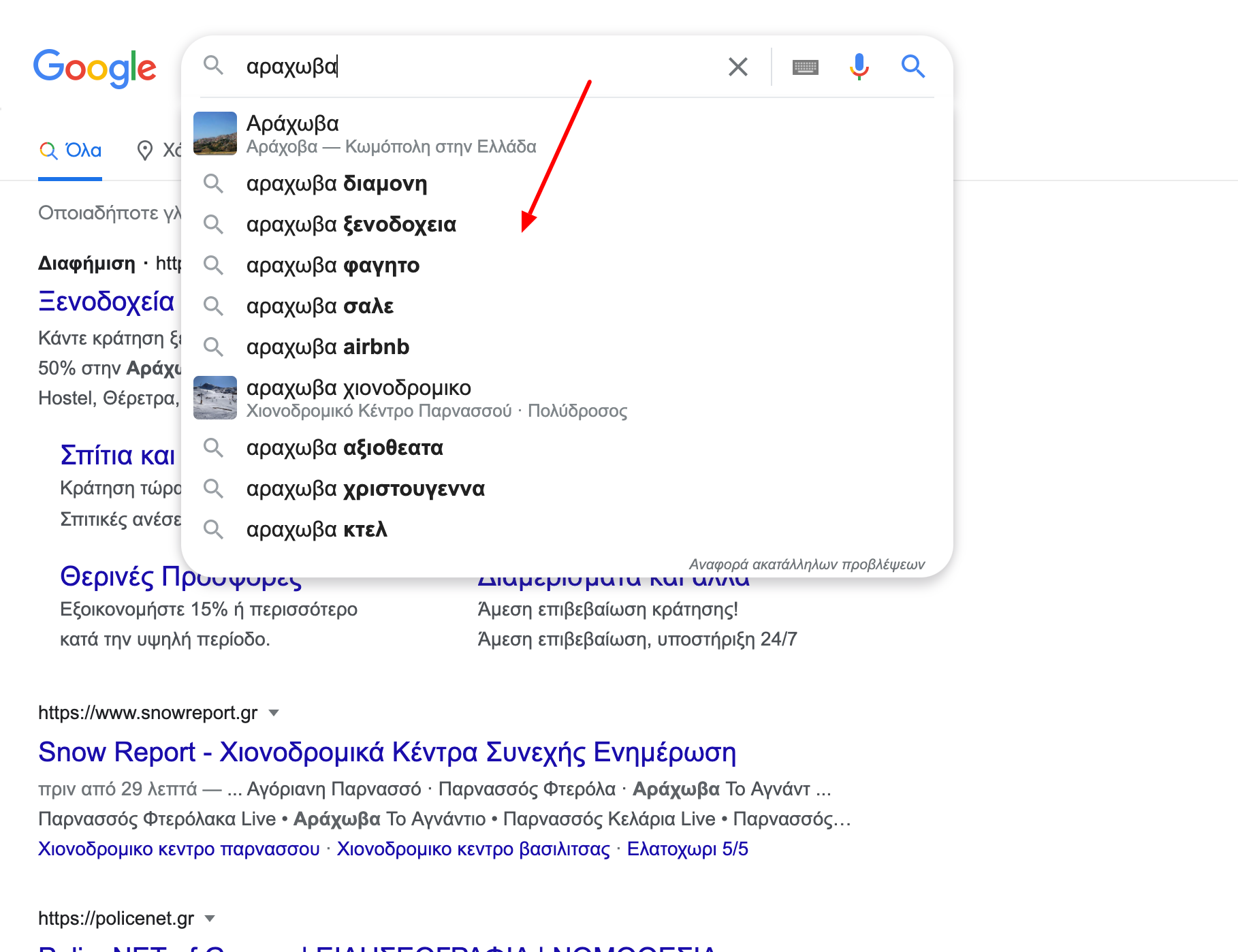

In the example below (fig. 5.7), a specific page (on activities in Arachova) shows that users who select it search terms like “arachova attractions,” “arachova what to see,” “arachova where to go,” “arachova what to do,” etc.

Specifically, the page appeared for 401 different queries. Typically, users first type “arachova” and then add other words; as noted above, such queries are informational.

There is also the simple query “arachova,” and this is where things get interesting.

Figure 5.7: Performance panel in Search Console.

For the simple term “arachova,” search volume and interest are disproportionately high: the page appeared 42,000 times, yet only 146 users clicked it, even though its average position was No. 6, which is not low enough to explain such poor performance.
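Spotting such cases can be partly automated once the query table is exported from the Performance panel. A minimal sketch, assuming the rows have already been loaded into simple records of query, clicks, and impressions. The record layout and both thresholds are assumptions for illustration, not Search Console's export format or recommended values. It flags queries with many impressions but a CTR far below the site average.

```python
from dataclasses import dataclass

@dataclass
class QueryRow:
    query: str
    clicks: int
    impressions: int

def underperforming(rows: list[QueryRow], site_ctr: float,
                    min_impressions: int = 1_000, ratio: float = 0.25) -> list[str]:
    """Return queries with at least `min_impressions` impressions whose CTR
    is below `ratio` times the site-wide CTR (both given as fractions)."""
    flagged = []
    for row in rows:
        if row.impressions >= min_impressions:
            if row.clicks / row.impressions < ratio * site_ctr:
                flagged.append(row.query)
    return flagged

rows = [
    QueryRow("arachova", 146, 42_000),                  # figures from the text
    QueryRow("hypothetical other query", 300, 3_000),   # made-up row for contrast
]
print(underperforming(rows, site_ctr=0.058))  # ['arachova']
```

With the figures above, “arachova” (146 clicks from 42,000 impressions, about 0.35%) is flagged against the site's 5.8% average.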

The term “arachova” is very broad and the intent behind it is unclear: is someone searching for a hotel (transactional), travel directions or weather (informational), or the Arachova municipality site or a business named “Arachova” (navigational)?

It may not even be desirable for the page to appear there in the first place: if the main intent is navigational, users who click would likely not find what they want, would bounce, and would load the server needlessly.

Nevertheless, regardless of intent, the click-through rate is low.

Reasons might include a poor page title as it appears in results, or other modules in the results capturing user attention better.

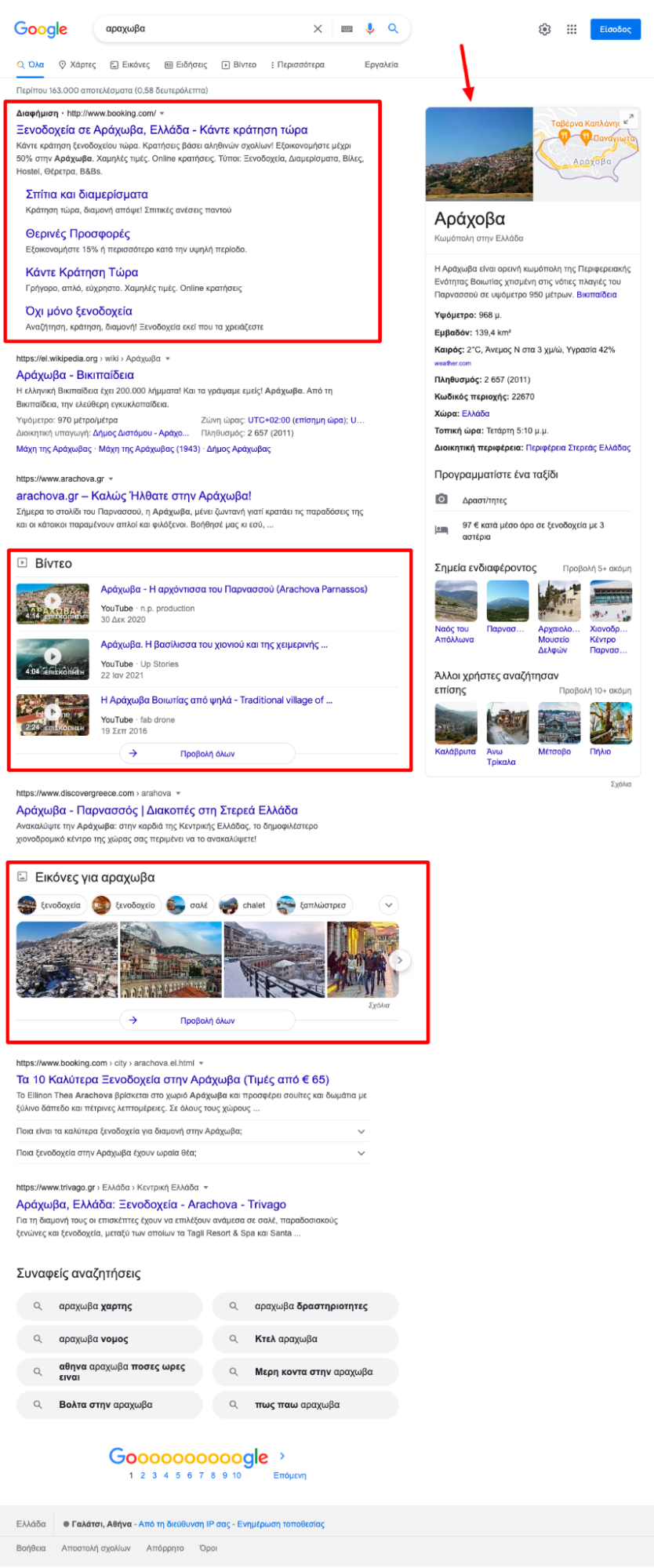

In such cases, run the same search in a private window (Windows/Linux/Chrome OS: Ctrl+Shift+n; Mac: ⌘+Shift+n) so results aren’t personalized by cookies/history (fig. 5.8).

Seeing the results, one can understand the reasons for low performance and whether it’s worth pursuing that query: there is a large ad block at the top; to the right, Google’s knowledge panel with general Arachova info like elevation, population, etc.; Wikipedia and the Arachova municipality appear among the top results; and there are video and image slots.

Figure 5.8: Search for “arachova” in private session (02/2022).

Inevitably, that page stands little chance.

At the same time, it becomes clear that the generic “arachova” query gets generic answers likely mismatched to the site’s business goal (accommodation).

Therefore, for now that query and its results cease to be a concern.

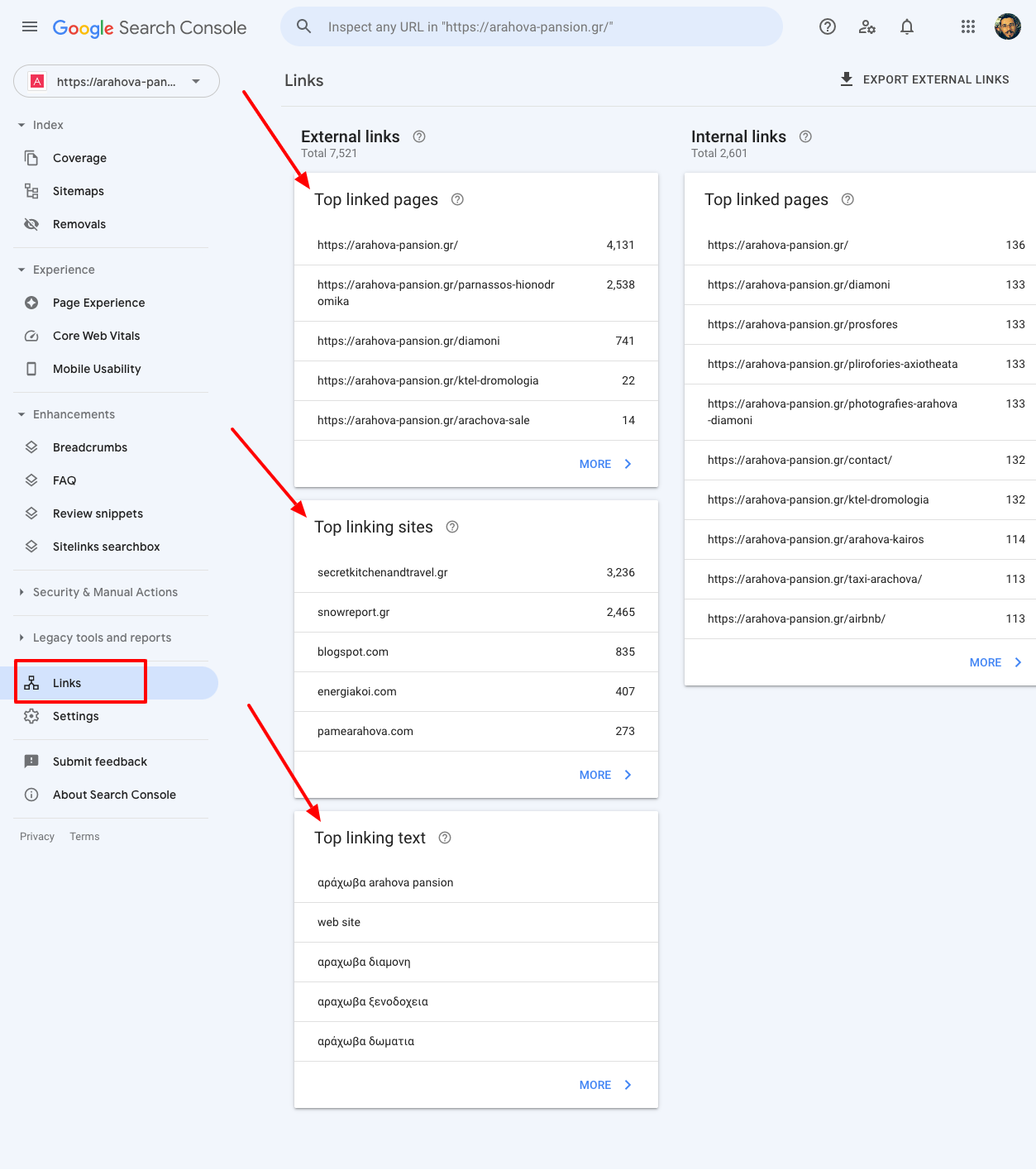

Finally, a panel worth checking is Links, where inbound links from other sites and their target pages can be found (fig. 5.9).

Figure 5.9: Links panel in Search Console.

Since many, diverse, high-quality links from reputable sites are a signal to Google that the destination pages and site have value—and thus should rank higher—the Links section is quite important.

Typically, the most targeted, linked pages also have the highest traffic and the greatest appearance in Google Search results. Therefore, the number and quality of referring sites and the number of distinct pages they link to are critical (better 1,000 links from 100 popular sites to many pages than 10,000 links from 10 low-quality sites to a single page).
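The three quantities in that comparison (total links, distinct referring sites, and distinct target pages) are easy to compute from a list of links, such as one exported from the Links panel. A sketch, assuming each link is a (source URL, target URL) pair; the URLs are hypothetical, and link quality is not measured here.

```python
from urllib.parse import urlparse

def link_profile(links: list[tuple[str, str]]) -> dict[str, int]:
    """Summarise (source URL, target URL) pairs into basic link-profile counts."""
    referring_sites = {urlparse(src).hostname for src, _ in links}
    target_pages = {dst for _, dst in links}
    return {
        "links": len(links),
        "referring_sites": len(referring_sites),
        "target_pages": len(target_pages),
    }

links = [
    ("https://blog-a.example/post1", "https://www.example.gr/"),
    ("https://blog-a.example/post2", "https://www.example.gr/hotels"),
    ("https://news-b.example/story", "https://www.example.gr/hotels"),
]
print(link_profile(links))  # {'links': 3, 'referring_sites': 2, 'target_pages': 2}
```

Grouping by hostname treats www.site.gr and site.gr as different sites; grouping by registered domain would need a public-suffix list.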

Google Search Console has many more features—anyone can discover and use them as needed—but for quick monitoring, the areas above are sufficient. Even non-experts can get a basic picture of how a site performs in Google Search.

Tools

The performance of an online presence in search engine results can be monitored with suitable tools, free or paid, used properly.

Most premium tools maintain historical records of results across thousands of queries and log inter-page links to build a basic profile for each site.

In practice, the most important thing to know is proper use of Google Search itself.

For most non-professional or small professional presences, this will suffice, since most premium services target SEO professionals.

Google Search (free).

Almost everyone uses Google Search daily on computers or smartphones.

In reality, most people know only a small portion of its very useful features.

To get default, depersonalized results (not based on search history or cookies), open Google Search in incognito/private mode.

As noted earlier, this is done with Ctrl+Shift+n on Windows/Linux/Chrome OS and ⌘+Shift+n on Mac, or by choosing New Incognito Window from the File menu. Private mode is also a good way to avoid ad retargeting and, reportedly, to get a better price from, say, an airline.

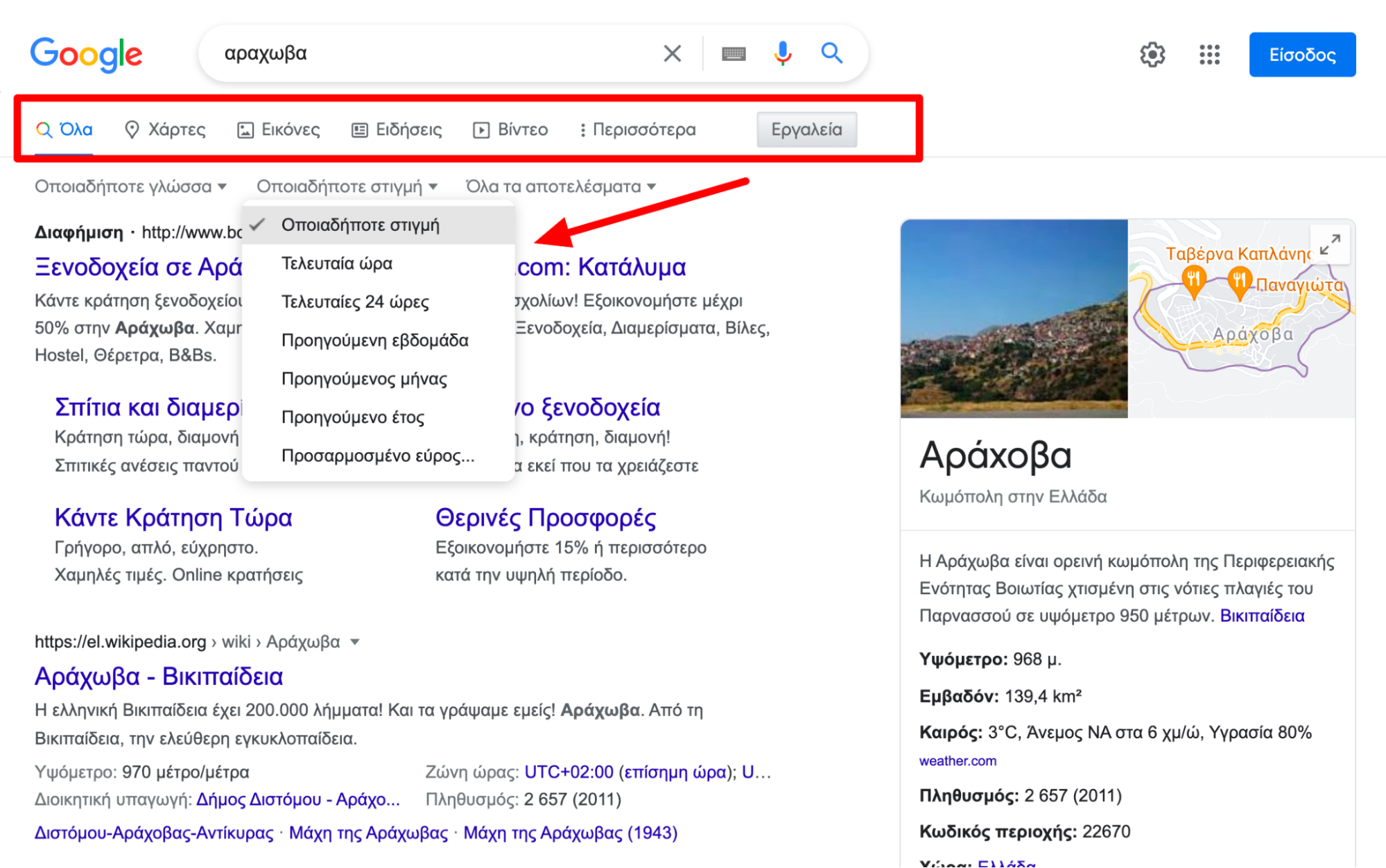

A very useful feature is the tabs toolbar right under the search bar (fig. 5.10).

There are options for images, news (results specifically from news sites), videos, etc.; under Tools, the language and publication time (pages published within a specific timeframe) can be set, which is useful for tracking a query and spotting new pages and information.

Figure 5.10: Google Search toolbar.

Before the toolbar, there is another useful feature: autosuggest.

This is the menu shown while typing a query, based on similar searches by other users—very useful even when organizing a site’s structure and content (fig. 5.11).

In the example below, the core sections for “Arachova” according to Google Search are accommodation, hotels, food, chalets, Airbnb, ski resort, attractions, Christmas, and KTEL buses (many already exist as sections on the Arachova site).

Figure 5.11: Suggestions while typing in Google Search.

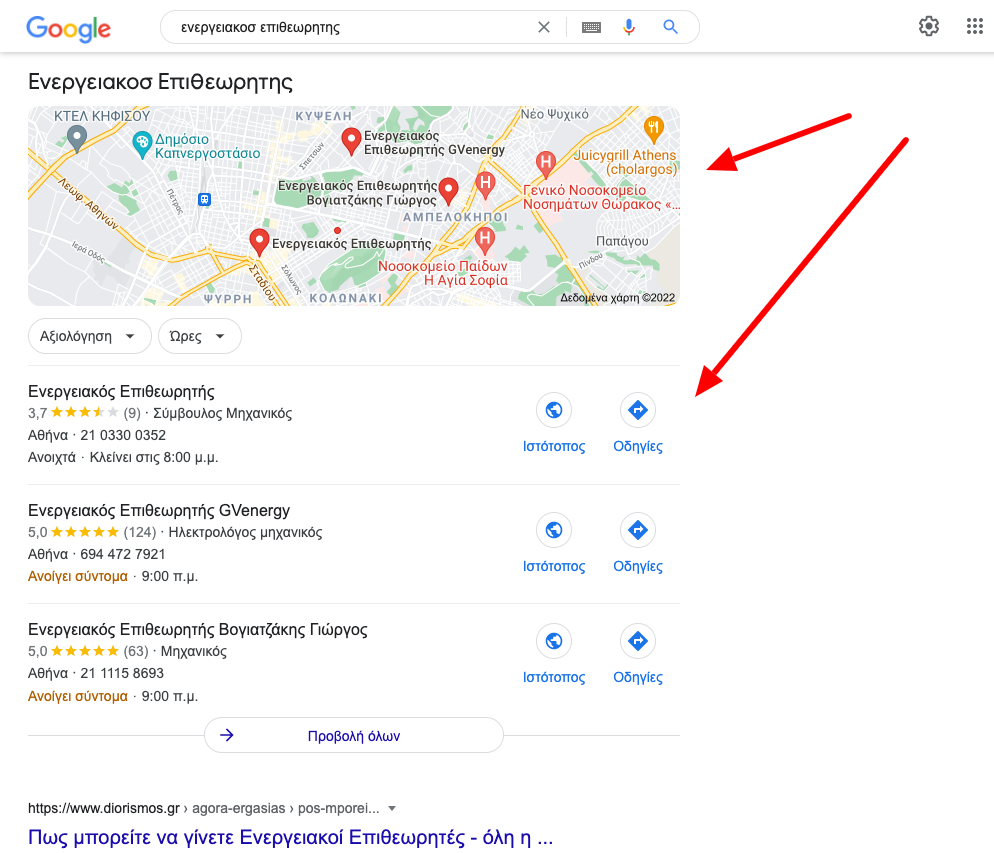

Other key elements of Google Search results worth recognizing are the Knowledge Graph and maps.

The Knowledge Graph is a set of authoritative information Google provides for a result, such as the right-hand box titled “Arachova” with population, elevation, etc., in fig. 5.10.

With it, the nature of the query can be inferred—usually informational or navigational.

Maps are a great opportunity for a brick-and-mortar business to rank high via Google My Business (fig. 5.12).

Figure 5.12: Business suggestions via Google Maps/Google My Business in Google Search.

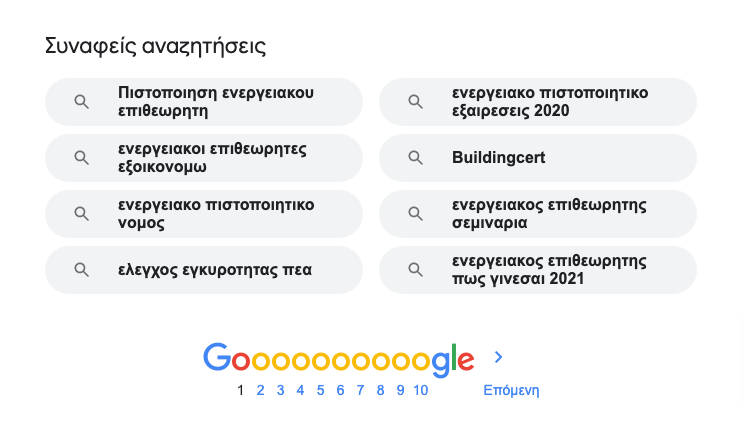

Another element often used to understand related information to a query—and whether it can be answered on a page—is Related searches (fig. 5.13).

Related searches can yield answers and ideas about adjacent topics and easily guide understanding of the intent Google perceives—extremely important!

In the example below for “energy inspector,” results include those relevant to existing and prospective inspectors (certification, seminars, how to become, buildingcert) and those about issuing energy certificates and the “Exoikonomo” subsidy program (subsidy, law, PEA check, exceptions).

Figure 5.13: Related searches in Google Search.

Beyond these useful features, specific search operators are often helpful.

Quotes (“ ”) force exact phrase matches.

For example, searching athletic tracksuits for kids returns content containing those words in any order, while “athletic tracksuits for kids” returns only results with that exact phrase.

A hyphen (-) before a word excludes pages containing that word.

For example, arachova -food returns results without “food.”

site:example.gr with or without an extra word returns results only from example.gr—very useful for competitor checks.

For example, διαμονη site:onparnassos.gr (διαμονη is Greek for “accommodation”) shows all pages Google knows from the competitor Onparnassos that relate to accommodation.

An asterisk (*) between words returns results with anything between them.

For example, arachova * food returns results like traditional food, good food, arachova livadi food, etc.

OR between two terms returns results satisfying either term.

For example, arachova hotels OR arachova ski resort returns results with either hotels or the ski resort. AND between terms returns results containing both.

Adding “near me” to any search term returns results based on the current location.

Finally, intitle:example returns results with “example” in the page title.
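Operators can be combined freely and typed straight into the search box. For repeated checks, such as a weekly look at a competitor, it can help to keep the queries in a small script that builds the corresponding search URLs. A minimal sketch, assuming only the standard q parameter of google.com/search; the query list reuses the examples above.

```python
from urllib.parse import urlencode

def google_search_url(query: str) -> str:
    """Return a Google Search URL for a query that may contain operators."""
    return "https://www.google.com/search?" + urlencode({"q": query})

queries = [
    '"athletic tracksuits for kids"',            # exact phrase
    "arachova -food",                            # exclude a word
    "διαμονη site:onparnassos.gr",               # one site only (non-ASCII is percent-encoded)
    "arachova hotels OR arachova ski resort",    # either term
    "intitle:arachova",                          # word in the page title
]
for q in queries:
    print(google_search_url(q))
```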

It’s a fact that Google Search and proper use of it are extremely powerful and can provide great value both in creating and in monitoring a site’s performance.

Proper queries and analysis of Google Search results are the alpha and omega for site success.

Google Search Console (free).

Search Console’s capabilities and monitoring were discussed above.

Its per-page and per-query monitoring capabilities are practically inexhaustible.

One can find the highest-traffic pages, those with the highest CTR, the average CTR, historical (up to 16 months) performance for pages and queries, CTR and average position, changes in search volumes over time, performance by device and location (country), how many pages are in the Google Index and crawl frequency, etc.

It can also show appearance types (standard result or rich result), how user-friendly the site is regarding speed, stability, and interactivity (Core Web Vitals), and more.

Most of the above mainly interest SEO professionals; however, anyone concerned with site performance can glean useful information from Search Console and improve presence in search engines.

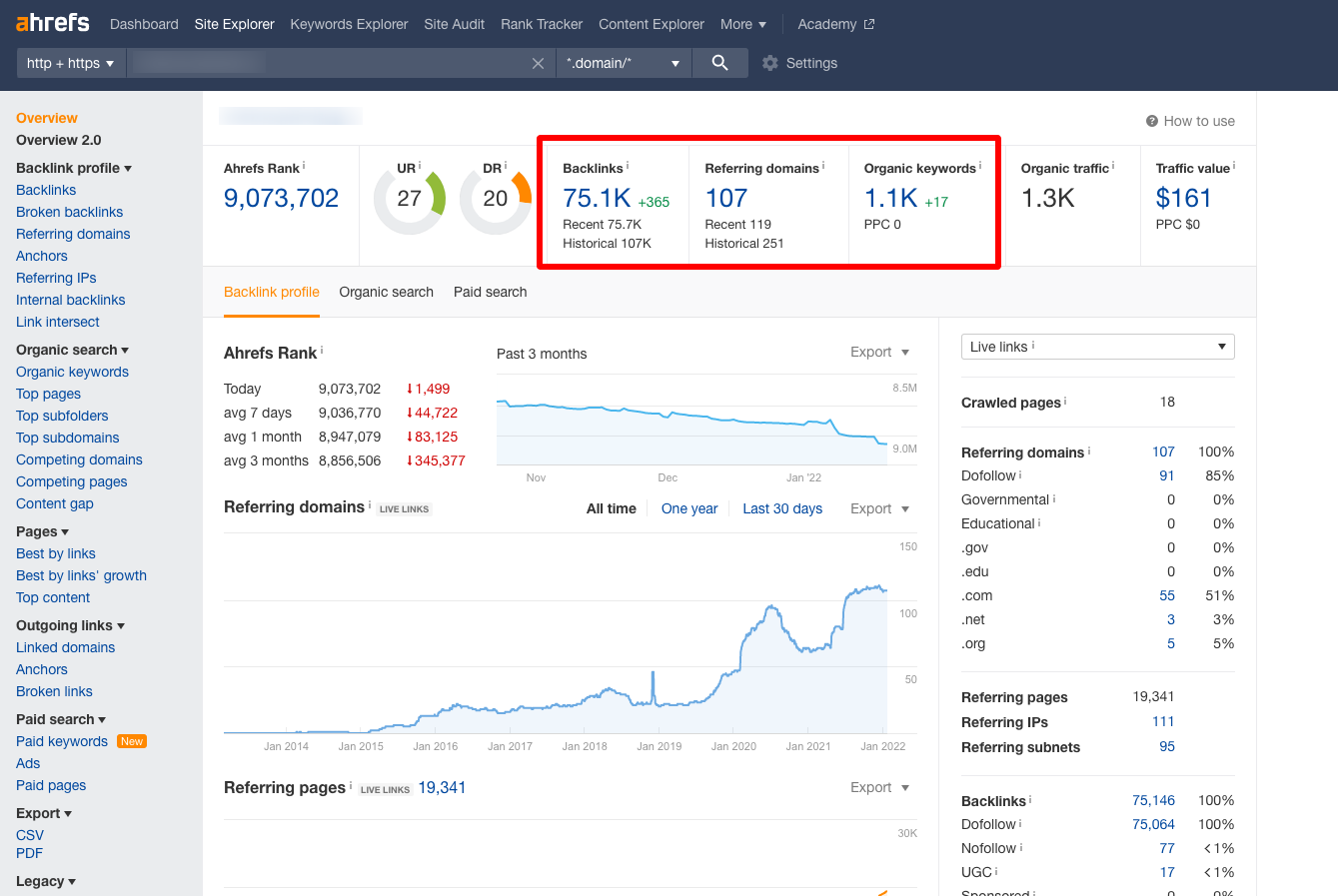

Ahrefs (subscription).

Ahrefs.com is one of the most renowned SEO tools, used by major companies and professionals.

It has reliable data and a huge database of backlinks and Google Search results—useful both for tracking an owned site and for analyzing competitors.

Figure 5.14: Ahrefs.com dashboard.

It also identifies and analyzes competitors, points out content gaps between a target page and its competitors, and, in a favorite use, finds broken links: pages of interest containing links that no longer work, which may be an opportunity to have them replaced with links to one’s own pages.
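The idea behind that broken-link use can be sketched in a few lines: collect the links on a page of interest and keep those that no longer resolve. This is not Ahrefs' method, which works at scale over its own index. Here the HTTP check is passed in as a hypothetical status_of function so the sketch runs offline; a real check might send requests with urllib.request and treat 404 or 410 responses as broken.

```python
from html.parser import HTMLParser
from typing import Callable

class LinkCollector(HTMLParser):
    """Collects the href values of <a> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.hrefs: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.hrefs.append(href)

def broken_links(html: str, status_of: Callable[[str], int]) -> list[str]:
    """Return the page's links whose HTTP status (from `status_of`) is 404 or 410."""
    collector = LinkCollector()
    collector.feed(html)
    return [href for href in collector.hrefs if status_of(href) in (404, 410)]

page = '<p><a href="https://a.example/ok">ok</a> <a href="https://a.example/gone">gone</a></p>'
statuses = {"https://a.example/ok": 200, "https://a.example/gone": 404}  # made-up responses
print(broken_links(page, statuses.__getitem__))  # ['https://a.example/gone']
```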

Finally, it provides an estimated click volume for each keyword, which is very valuable given that over half of searches end with zero clicks.

It’s a powerful toolset and almost essential for professionals working on SEO.

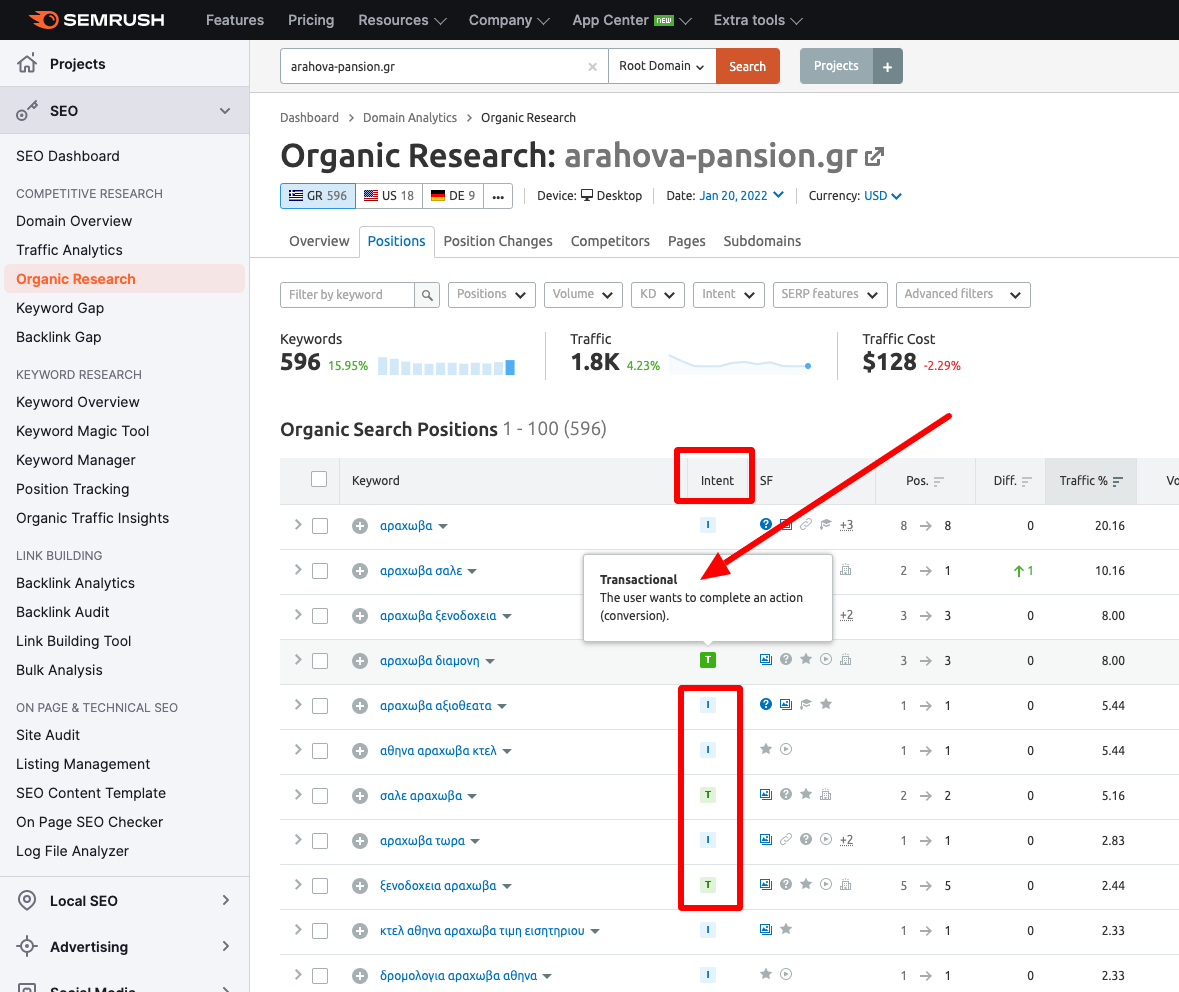

Semrush (subscription).

Semrush.com is equally well-known and high-quality.

Functions such as tracking ranking positions over time, categorizing queries by intent, e.g., informational, transactional, navigational (fig. 5.15), and on-page site audits of code and content quality are excellent.

Figure 5.15: Semrush query-intent categorization.

Like Ahrefs, Semrush targets large companies and professionals managing and improving third-party sites.

Ubersuggest (free & subscription).

Ubersuggest is also a good tool, free with some paid features.

Several functions and the logic of discovery in Ubersuggest were seen in the previous chapter on competition.

Core features—keyword ideas, site checks for gaps and issues, and competitor analysis—are sufficient for basic use when starting out.

Moz (subscription).

Moz.com is a premium tool similar to Ahrefs and Semrush. It includes site audit functions, tracking and historical ranking data, query and backlink analysis, etc.

However, its database isn’t as extensive and is focused on the U.S.

Anyone seeking a solid internet presence today must know the basics of how search engines work, which remain the primary source of referrals to the pages and sites best serving a query.

For small—even professional—sites and individual pages, deep knowledge or premium subscriptions aren’t necessary.

Good understanding of Google Search capabilities and perhaps simple use of Google Search Console will be enough to see which queries bring visitors, their intents, what content to add, or which pages get no traffic and should be retired.

It will also yield valuable competitive intelligence useful both online and offline.

Monitoring site performance in search engines, beyond managing and optimizing the site itself, provides valuable insights about trends or fluctuations in search traffic volumes that precede any other actions (e.g., transactions).

Of course, someone just starting an online presence needn’t monitor search performance daily; a brief weekly review is enough to get a picture.

The more serious, professional, and large a presence becomes, the more time, energy, and resources it will require in these areas.

It is no coincidence that all large companies relying on the digital environment for survival employ professionals whose job is to monitor and improve what’s needed based on search engine guidance.

Search engines rank results with complex algorithms to best answer questions.

That resulting knowledge can be used to offer better services, deliver better answers, and ultimately gain more customers and followers for what is created and offered.

Things to keep in mind:

- Web search is one of the most popular ways to find a website.

- There are three basic query categories: transactional, navigational, informational.

Structure the site around the core intent of visitors.

- Submit and monitor the site in Google Search Console.

It will help get to know visitors better—and help Google get to know the site better.

Cover image source: Unsplash.com